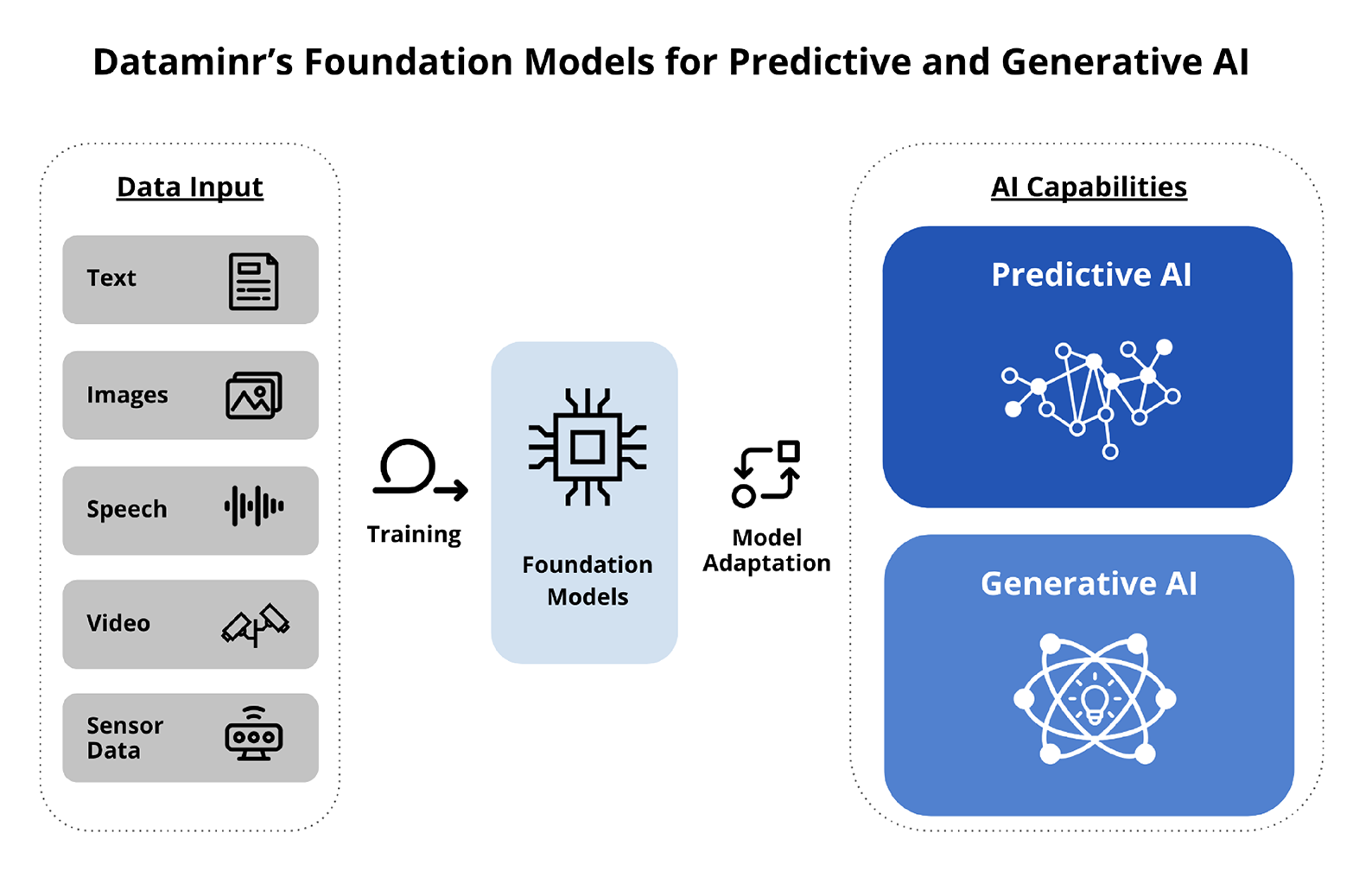

In this second installment of our new AI blog series, I will cover the pillars of Dataminr’s AI strategy for using large language models (LLMs) and Foundation Models. I recently joined Ted Bailey, our Founder and CEO, to outline the company’s exciting vision for Harnessing the Power of LLMs and Multi-modal Foundation Models in Dataminr’s AI Platform. In order to realize Dataminr’s expansive vision, our AI strategy has been to create a broad set of Dataminr-unique, customized Foundation Models and LLMs.

We’ve created customized Foundation Models through several techniques, including both the “fine tuning” of open-source Foundation Models and also through building out our own distinct proprietary models from the ground up. Today, our AI platform includes more than 35 customized Dataminr Foundation Models operating in production in tandem with an extensive array of specialized AI models created by our AI and engineering teams from scratch.

Recently, there has been significant public focus on large scale Foundation Models accessible via APIs, such as GPT-4 (which powers ChatGPT). There is a common misconception, particularly outside of the technical community, that integrating LLMs into enterprise products requires using these mega models.

While off-the-shelf Foundation Models such as GPT-4 can perform well in certain tasks, there are several significant challenges when applying these types of models to specific problem domains. General purpose LLMs are generally extremely large, which means they can be prohibitively expensive to run at scale. And, by design, they’re also generic and broad in nature, often underperforming in terms of accuracy when compared to models built and tailored for domain-specific specialized applications.

Another key limitation of current, off-the-shelf LLMs is that they have training cutoff dates and do not have up-to-date information. Since these models are trained with extremely large amounts of data, it’s not practical, nor desirable, to continuously re-train them. In addition, customization options for off-the-shelf models are limited (e.g., Prompt Engineering), making it virtually impossible to truly optimize these models for the highest performance levels at scale.

For all the reasons above, there is a growing consensus in the technical community that for B2B enterprise applications with specialized use cases, the process of customizing Foundation Models is essential to achieve the desired performance levels (e.g., model accuracy, detection and summarization latency, and cost effectiveness). Taking this approach is also critical to ensuring that the necessary controls are designed into the applications.

Therefore, customizing a broad set of Foundation Models and LLMs for specific tasks—rather than just relying on a single, very large off-the-shelf LLM model—is increasingly viewed as a requirement for real world applications that effectively address a specific set of domain-specific problems for enterprises and government organizations.

The challenge, however, with this approach is that it is far more difficult to implement. For new startup companies, it can be nothing short of impossible. Customizing LLMs in production for large-scale use cases and applications requires three key ingredients:

- A vast archive of domain specific proprietary data

- World class AI prowess

- An AI platform architecture to streamline all aspects related to experimentation, fine-tuning, optimization, deployment, and monitoring of the models

Practically speaking, having these three elements requires substantial upfront platform investment, years of platform development and architecture work, and access to an extensive archive of proprietary domain-specific data.

For Dataminr, the cornerstone of customizing Foundation Models is our unmatched proprietary data and event archive which we have been building for more than a decade. The 35-plus customized LLMs and Foundation Models we’ve operationalized in production have been tailored to our use cases with our archive. This is the fundamental reason why Dataminr’s Foundation Models excel at performing the specific range of tasks involved in the discovery, categorization, contextualization, and summarization of real-time events, risks, and critical information.

Dataminr’s unique 10-plus year archive is multidimensional. It is continually updated in real time and spans:

- All the events, risks and information our AI platform has identified to date

- Data streams from nearly one million public data sources

- A vast archive of human-AI feedback loop annotations from domain experts

When a new pattern in public data is spotted, our AI platform can instantaneously leverage the archive, recognizing similarities and differences by cross-comparing an emerging pattern with all those recorded before. In essence, our AI platform can dynamically use the multi-dimensional contours of the past to more accurately predict the nature of the present. Leveraging our unique archive enables Dataminr to create domain and task-specific customized Foundation Models that are purpose built, and that can’t be replicated by anyone else.

Dataminr has another distinct proprietary data advantage when deploying LLMs and Foundation Models. We have also spent years building an expansive Knowledge Platform that includes a proprietary custom Knowledge Graph of millions of public and private sector entities and relationships between those entities. Using Foundation Models in conjunction with our Knowledge Platform has further enhanced performance and allowed Dataminr to ensure that we deliver the most up-to-date, relevant and actionable alerts to our customers. Additionally, integrating this Knowledge Platform of fixed domain-specific referential points has also allowed us to mitigate the propensity for Foundation Models to sometimes “hallucinate”—one of the growing challenges faced with LLMs.

Since the launch of our first LLM-based models in 2020, our world-class AI and engineering teams (including more than 20 Ph.D.s in AI) have worked across all aspects of our platform to increase its ability to scale the customization of Foundation Models. We’ve made platform architectural decisions to more easily integrate and deploy AI models, and we’ve created agile experimentation frameworks and processes that allow us to quickly train, test, evaluate, and monitor AI models before launch, as well as while they run in production.

Recently, we’ve expanded our efforts to formalize these steps in LLMOps: the practices, techniques, and tools required to operationalize LLMs in production at a very large scale. This robust process includes optimization of models to run faster at lower cost, at scale, on specialized hardware accelerators. We’ve built new AI creation and experimentation tools that include datasets and workflows for model creators, and internal model service APIs and dashboards that allow for continuous monitoring of model performance.

Taking these steps has resulted in a rapid cycle of Foundation Model customization, testing and integration. Streamlining LLMOps is critical because different LLMs and Foundation Models perform differently on different tasks, and each Foundation Model has to be uniquely customized based on the model’s strengths and weaknesses. This requires creating and managing many benchmarks, metrics, and experiments to optimize each model’s performance and performance of the platform as a whole on an ongoing basis.

The combination of LLMOps with Dataminr’s historical 10-plus year archive makes Dataminr’s AI platform truly unique and unparalleled. Our unmatched archive empowers Dataminr to create proprietary, customized Foundation Models that are purpose built. We continue to deliver highly valuable signals to our customers that no other company can replicate, while further accelerating our AI platform’s uncatchable advantage.

Stay tuned for more AI blog posts.